Privacy is a really interesting subject, but some of its terminology can be hard to get into. That's why I want to share the nymity slider with you. The nymity slider is a tool to visualise the amount of identifiable information in an interaction. It allows us to assess the level of privacy in a transaction in a way that's easy to grasp, regardless of privacy expertise. We will see how the nymity slider can help in designing systems and identifying privacy risks, with a free worksheet at the end.

The concept of nymity originated in the idea that our interactions hold two kinds of information:

- Information exchanged between the participants of the interaction. This information is the content of an interaction. Think of the words in a text message you send or the payment information in a bank transaction.

- Information about the participants or the interaction, also called metadata. Metadata can be all sorts of things, but here we're focused on the identifying metadata. This is information like the location of the interaction, the IP addresses of the people involved, its duration, etc.

Nymity is based on the second category, the metadata. It aims to measure the identifiable information surrounding an interaction instead of what happens in the interaction itself. The creator of the nymity slider, Ian Goldberg, defined nymity as:

Definition - Nymity. The amount of information about the identity of the participants that is revealed in a transaction.

Instead of interaction, nymity uses the more formal term transaction to describe an individual interaction in a system. One interaction can involve multiple transactions, like making a payment and receiving a receipt. The nymity level of two transactions in the same system can vary based on two factors: the context of the transaction and the metadata involved. The next two examples show how this works for each factor.

First is the situation where you use the same metadata, but change the context. A security analyst who wants to find hackers on a network will look at identifiable metadata to spot suspicious behaviour. Her manager only gets general reports about activity on the network, generated from the same metadata but without any identifiable information. The same metadata is used for both identifiable and anonymous transactions.
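To make the context factor concrete, here is a minimal Python sketch. It assumes a hypothetical log format with a source IP, a timestamp and an event name; the field names and the addresses (taken from documentation-only IP ranges) are invented for illustration. Both functions read exactly the same metadata, but only the analyst's view keeps the identifying IP addresses, while the manager's report aggregates them away.

```python
from collections import Counter

# Hypothetical network log: the same identifying metadata
# (source IP, time, event) is available to both roles.
events = [
    {"src_ip": "203.0.113.7", "time": "09:01", "event": "login_failed"},
    {"src_ip": "203.0.113.7", "time": "09:02", "event": "login_failed"},
    {"src_ip": "198.51.100.4", "time": "09:05", "event": "login_ok"},
    {"src_ip": "203.0.113.7", "time": "09:06", "event": "login_failed"},
]


def analyst_view(log):
    """Identifiable context: count failed logins per source IP."""
    return Counter(e["src_ip"] for e in log if e["event"] == "login_failed")


def manager_report(log):
    """Anonymous context: count events by type, dropping the IP addresses."""
    return Counter(e["event"] for e in log)


print(analyst_view(events))    # Counter({'203.0.113.7': 3})
print(manager_report(events))  # Counter({'login_failed': 3, 'login_ok': 1})
```

The nymity of each transaction follows from what the output keeps, not from what the input contains.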

Likewise, you can change the nymity of a transaction by enriching the metadata. Suppose you use a loyalty card for your favourite clothing store. At first, you picked it up because of the free loyalty points. Later, you decided to register it to your email address to get further personalised discounts. The context has remained the same, namely using a loyalty card when paying in the store, but now there's more identifiable metadata tied to your purchases.

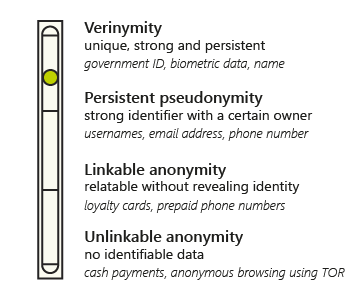

The nymity scale does not have fixed steps, but it can roughly be divided into four levels:

Verinymity

Verinymity is the top end of the nymity scale. It's based on the word verinym, or true name: something that makes you stand out from the crowd. It could be your name, face, address or phone number. Just something that's likely to be unique to you. If a dataset is small enough, more abstract information could be used to identify you personally. If you are the only one in your street to work at a certain place, and we know that, then we can pick you out of a dataset with postal codes and workplaces. As such, whether something is a verinym depends on the context.
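The postal code and workplace example can be checked mechanically. The sketch below uses hypothetical records and counts how often each combination of attributes occurs; any combination that appears exactly once singles out one person in that dataset. This is the same intuition behind the k-anonymity measure, where a group of size one offers no anonymity at all.

```python
from collections import Counter

# Hypothetical records: neither attribute is a name on its own,
# but together they may single someone out in a small dataset.
records = [
    {"postal_code": "1011AB", "workplace": "Library"},
    {"postal_code": "1011AB", "workplace": "Hospital"},
    {"postal_code": "1011AB", "workplace": "Hospital"},
    {"postal_code": "2500CD", "workplace": "Library"},
]


def unique_combinations(rows, keys):
    """Return the attribute combinations that occur exactly once.

    A combination seen only once acts as a verinym within this dataset:
    anyone who knows those attributes about a person can find their record.
    """
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return [combo for combo, n in counts.items() if n == 1]


print(unique_combinations(records, ["postal_code", "workplace"]))
# [('1011AB', 'Library'), ('2500CD', 'Library')]
```

Adding or removing records changes the result, which is exactly why whether something is a verinym depends on the context.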

Goldberg names two important properties of verinyms: they are linkable to the person and don't change much. This can be good for systems that need to know your identity for security reasons, like a digital government service or online banking. At the same time, if this information is shared or stolen it could have problematic consequences for you.

Persistent pseudonymity

We talk of persistent pseudonymity when we can relate different transactions to the same person with certainty. A persistent pseudonym is a strong form of pseudonymity, in that only the owner(s) of the pseudonym can do a transaction under that identity. Two common examples are email addresses and usernames. When you get two emails from dude92@example.com, you know that both came from the person or group of people with access to that email address. Usernames on a site like Reddit or online forums work the same. You can link specific messages to a pseudonym that only the owner(s) of that account can access. Pseudonyms can hide someone's true identity, but the process is often reversible and can lead to identification.

Linkable anonymity

Linkable anonymity lets someone with the metadata link separate transactions to the same identity. It's anonymous, so the identity of the person itself remains unknown. Customer loyalty cards are a good example of this. No matter how a person pays, by card or with cash, the store can link transactions to the same customer. There's no need to know who the customer is beyond what they purchase, so the amount of identifiable information can be limited. Just the loyalty card number is enough.

Linkable anonymity differs from persistent pseudonymity in that we don't know anything about the person doing the transaction. A customer can have multiple loyalty cards, or swap them with friends. All we know is that we can link multiple transactions to the same loyalty card. Separating persistent pseudonymity from linkable anonymity can be difficult in practice, though, as someone who uses a single loyalty card for a long time can shift from anonymous to pseudonymous, moving up the nymity scale to somewhere in between the two.

Unlinkable anonymity

At the bottom end of the nymity scale, unlinkable anonymity means that there's absolutely no identifiable information in the transaction. This makes it impossible to know if it's the same person performing two separate transactions, which is where unlinkable comes from.

A classic example of unlinkable anonymity is cash. You can pay with cash at the same store multiple times a month and nobody would be the wiser from the data. The store can't identify you, nor know which transactions were done by the same person. Physical mail has similar properties. It's impossible to determine a common sender from two standard envelopes with printed addressing, standard stamps and no return address. That is, unless police forensics get involved, but that's (hopefully) an unlikely scenario.
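The difference between linkable and unlinkable anonymity shows up as soon as you try to group transactions. In this minimal sketch, with a hypothetical record format, purchases scanned with a loyalty card carry only the card number, whatever the payment method, while purchases without a card carry no identifier at all.

```python
from collections import defaultdict

# Hypothetical till records: the card number is the only metadata kept.
# None means the purchase was made without a loyalty card.
purchases = [
    {"loyalty_card": "LC-0042", "item": "jeans"},
    {"loyalty_card": None, "item": "socks"},
    {"loyalty_card": "LC-0042", "item": "t-shirt"},
    {"loyalty_card": None, "item": "scarf"},
]


def link_transactions(rows):
    """Group purchases that can be linked to the same loyalty card.

    Purchases without a card each end up in a group of their own,
    because nothing in the metadata ties them to any other purchase.
    """
    linked = defaultdict(list)
    unlinkable = []
    for row in rows:
        if row["loyalty_card"] is None:
            unlinkable.append([row["item"]])
        else:
            linked[row["loyalty_card"]].append(row["item"])
    return dict(linked), unlinkable


linked, unlinkable = link_transactions(purchases)
print(linked)      # {'LC-0042': ['jeans', 't-shirt']}
print(unlinkable)  # [['socks'], ['scarf']]
```

The store learns that card LC-0042 bought jeans and a t-shirt without learning who holds the card. The socks and the scarf may or may not have been bought by the same person; the data simply can't tell.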

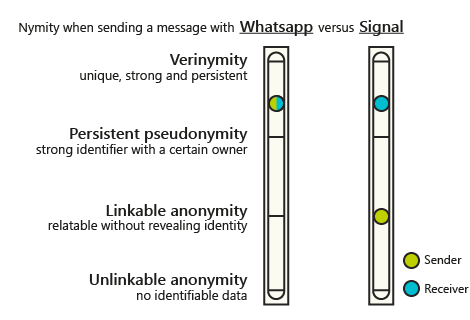

WhatsApp and Signal are two popular mobile messaging apps. Signal is often touted as being the more privacy-respecting of the two. We can test this statement by applying the nymity slider to the transaction of sending a message. Since the apps are too complex to fully pick apart here, we will only look at this feature.

WhatsApp and Signal look quite similar on the surface. Both apps use the same end-to-end encryption, which means that only the sender and receiver(s) of a message can read what it says. To others, it will look like random data that doesn't make sense. But we're not here for the contents of the message, we want metadata. That's where the difference is.

Let's start with WhatsApp. The app does not encrypt or obfuscate metadata. It sends it straight to Facebook's servers along with your encrypted message. For all you know, anyone at Facebook or its partners may have access to your identifiable information. Your user ID, phone number, IP address, who you messaged, how often you message: it's all there. Because there's a lot of persistent identifiable information, we would place it close to verinymity on the nymity scale. When I use the nymity slider I tend not to put a phone number at the very top, because it doesn't identify you with the same certainty as a government ID. For WhatsApp's metadata, it also doesn't matter whether you're the sender or the receiver.

Signal takes a slightly different approach. Using a technique called sealed sender, they are able to hide who has sent a message. This breaks an important part of the linkable information in the chain, because now we only know who received a message and at what time. That said, Signal still stores some identifiable information, like the IP address. IP addresses are not permanent, so messages only tend to be linkable for a short duration. But we can still link messages to the same sender this way, we just don't know anything else about their identity. This places the nymity for the sender somewhere at linkable anonymity. Don't forget that the receiver remains close to verinymity in this example, just like WhatsApp. You have to know where to deliver that message after all.

While the nymity slider is not an exact scale, this example shows how a single change in design impacts the identifying information in a transaction. It visualises that this transaction on Signal offers more privacy for the sender than WhatsApp does.

Nymity and system design

The first way we can use the nymity slider is when we design an information system. At this stage, we discuss what we want from a system and how we will build it. The interactions of different people with the system can have varying levels of nymity. If we look at the system as a whole, we can classify its nymity as the highest level of nymity in its individual transactions. And here we apply a general rule of thumb: moving up the nymity scale is easier than going down.
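The rule that a system takes the highest nymity of its transactions amounts to taking a maximum over an ordered scale. The sketch below models the four rough levels as a Python `IntEnum`; the member names and transaction labels are illustrative assumptions, and since the slider has no fixed steps, placements such as "close to verinymity" are rounded to the nearest level.

```python
from enum import IntEnum


class Nymity(IntEnum):
    """The four rough levels of the nymity slider, ordered from low to high."""
    UNLINKABLE_ANONYMITY = 0
    LINKABLE_ANONYMITY = 1
    PERSISTENT_PSEUDONYMITY = 2
    VERINYMITY = 3


def system_nymity(transactions):
    """Classify a system by the highest nymity among its transactions."""
    return max(transactions.values())


# Rough placements from the Signal example: sealed sender lowers the
# sender's nymity, but the receiver must stay identifiable for delivery.
signal_messaging = {
    "send message (sender)": Nymity.LINKABLE_ANONYMITY,
    "deliver message (receiver)": Nymity.VERINYMITY,
}

print(system_nymity(signal_messaging).name)  # VERINYMITY
```

Because `IntEnum` members compare as integers, ordering and `max()` work without extra code. The example also shows why a single verinymous transaction is enough to set the level for the whole system.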

Moving up

One of the clearest examples of moving up the nymity scale is Facebook. When it became a global phenomenon, all you needed to register an account was an email address and a password. As Facebook got more users, it also attracted more bad guys doing evil things, like spamming or setting up scams. This prompted the company to take stronger measures to verify identities. While the registration process still appears the same on the surface, Facebook won't wait long to ask you for a phone number to verify your identity. There are even cases where Facebook locks accounts until their owners provide a copy of their government ID, such as people wanting to post political advertisements.

Moving up on the nymity scale may be good for security, but can have adverse impacts as well. I have been a non-user of Facebook for a long time, but at some point I decided to try spending €5 on advertising on the platform. I wanted to advertise a blog post about the privacy voting history of the European Parliament. Elections were coming up and maybe it could offer some insights. The advertisement was flagged as an advertisement about social issues, elections or politics. Fair enough, that's exactly what it was. To allow you to place such advertisements, Facebook wants a copy of your government ID. I had privacy concerns because of their track record, so I decided against providing this highly identifying information. Moving up on the nymity scale was a tradeoff between verinymity and accessibility, with tangible financial consequences.

A higher nymity isn't always a bad thing, especially if moving up the nymity slider is based on freely given consent. In the loyalty card example I used before, you had the option to register your email for personalised discounts. Say the store needs your email address to get the discounts to you. The move up in nymity is voluntary and doesn't restrict those who choose not to take it. If we had designed our loyalty cards to only work with an email address, moving to anonymous cards would likely have been more difficult to achieve.

Moving down

Moving down the nymity slider is possible, but often means changing the core design of a system, whereas verinymity can more easily be added on top. In the example of WhatsApp vs Signal, Signal chose to move down the nymity scale. They had to engineer an entirely new way of sending messages, replacing the conventional approach used by messaging apps. This touched on the core of the app: sending and receiving messages. The sender went from verinymity to linkable anonymity, but only because Signal chose to invest in the technique's research and development.

We can see the same mechanisms in online payments. Credit cards and transaction systems (banks, PayPal, etc.) have a high level of nymity. This level of nymity is often mandated by law to guarantee the security of online transactions. In an effort to create anonymous transactions like cash in the physical world, people have been hard at work on various kinds of digital currencies. One such example is the EU's work on a digital form of the euro. Such projects have to design completely new systems, because our current digital payment methods rely on identifiable information. A lower nymity is not something we can lay on top of that.

Because moving up in nymity is much easier than going down, a good principle for designing privacy-friendly systems is to aim for the lowest possible nymity. If new requirements call for a higher nymity, adding it is easier than taking it away. Mapping the nymity of transactions doesn't take too long (if you have diagrams available), and the resulting map can facilitate a discussion about system design and where you'd be able to use less identifiable information. When used like this, the nymity slider can be a helpful tool when designing a system.

Nymity and privacy risk

As mentioned at the beginning, the nymity slider is a good tool to quickly determine the amount of identifiable information in a transaction. That also makes it a good tool to help identify privacy risks. At the high end of nymity, the risk lies in processing linkable and identifiable information. At the low end, the risks lie in not knowing who you're dealing with. Let's consider them both.

Verinyms are great for security reasons. Verinymity benefits privacy when you want to ensure that personal data is only accessible to the right people: specific employees, the customer whose data it is, etc. One risk of verinymity lies in relying primarily on certain identifiable information, which can lead to cases like identity theft. SIM swapping is a good example. Like many other forms of digital crime, this form of identity theft saw a rise in activity during the 2020 pandemic. Popular media extensively covered the issue after several attacks that stole people's Bitcoin. While it's a highly targeted attack, the premise is not that difficult. The most common form of SIM swapping is social engineering, where someone pretends to be you or your partner on the phone with the telephone company's helpdesk. The scammer persuades the helpdesk to send them a new SIM card by offering some easily found identifying information, like birth dates, full names, current address, etc. A scammer will often add simulated stress to the mix, like the sounds of a crying baby or a faked panic attack. Many online services rely on phone numbers to verify your identity, perform password recovery or send SMS codes for two-factor authentication. Ownership of a phone number is often treated as a verinym, and with a shiny new SIM card the scammer now owns that identity. If a person knows your verinyms, they can pretend to be you and nobody would be the wiser.

The risk for unlinkable anonymity is the complete opposite. Unlinkable anonymity is good for privacy and works well for some cases, like cash. However, in many cases you want to know who you're dealing with. If you can't tell whether two messages that look similar are from the same author, you may have a problem. That sounds quite abstract, but it's a real issue we already have to deal with. Think of deepfakes: digitally generated videos that make it look and sound like someone is speaking words they never said. Deepfakes of political figures, like Obama, have already appeared and people have fallen for them. A video of someone speaking used to be good enough to guarantee their identity. With new technology, the nymity of a video has decreased. Additional systems exist to verify the authenticity of a video, like a post from an official YouTube channel or digital signatures, but they are not widely known yet. In time, we'll need new ways to verify the identity of the speaker in a video and bring it back to a nymity level where we can trust who we see.

The above also shows us the limits of the nymity slider. A given level of nymity does not inherently carry risks with it, but nymity may warrant looking at certain privacy risks. Risks like linkability, identifiability, non-repudiation and detectability are all more likely when nymity increases. However, these may not be considered risks depending on the specific context. Likewise, a higher nymity may benefit the privacy of a transaction. We all want our bank to know it's actually us when someone requests to take money out of our account. The nymity slider informs us of the nymity of a transaction and can help guide the identification of relevant privacy risks.

Nymity slider worksheet

The nymity slider is a really cool tool. It helps communicate a complex concept in a way that's visual and easy to grasp. Grab the nymity slider, look at the system you're working with and off you go. I've made a worksheet to get you started with using the nymity slider in your organisation.

Nymity worksheet (EN) Nymity werkblad (NL)

Key references

Goldberg, I. A. (2000). A pseudonymous communications infrastructure for the internet (PhD thesis). University of California, Berkeley. Retrieved from GNUnet.